React SEO: Tips To Build SEO-Friendly Web Applications

Last Updated on December 10, 2024

Quick Summary:

SEO has become an integral part of any web application on the Internet. It not only shapes how a product or service performs in the market but also affects the profits and effectiveness of a business, directly or indirectly. When it comes to Search Engine Optimization (SEO), React websites face considerable challenges. One primary reason is that most React JS developers and development companies rely on client-side rendering, whereas Google’s crawler indexes server-rendered HTML more readily. This creates significant challenges for SEO in React. Before going further, let us first understand how Googlebot crawls webpages.

Google handles around 90% of all online searches, making it the most important search engine to optimize for. Before delving into how React SEO works, let’s look at Google’s crawling and indexing process.

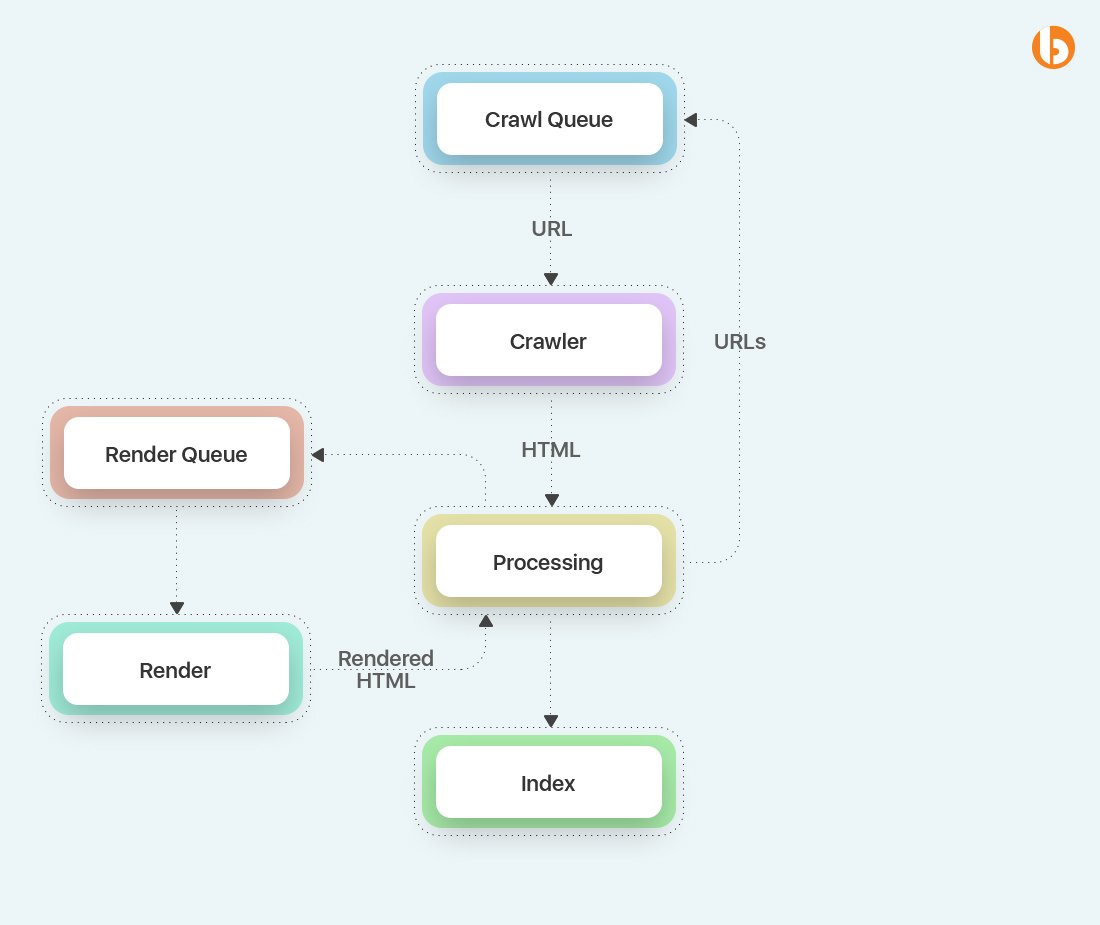

The image below is taken from Google’s documentation.

Diagram of Googlebot indexing a site.

Note: This is a simplified block diagram. The actual Googlebot is considerably more complex.

Points to Remember:

Did you notice the clear difference between the Rendering stage, which executes JavaScript, and the Processing stage, which parses HTML? This separation exists because of cost. Executing JavaScript is expensive, given that Googlebot has to look at 130 trillion webpages.

SEO is crucial for React-based websites. Below are a few SEO challenges that software engineers and developers can address and fix.

React applications depend on JavaScript, and as a result they can struggle with search engines. This happens because of the app shell model that React employs. The initial HTML does not include meaningful content, so a bot or a user needs to execute JavaScript to see the page’s content. This means Googlebot sees an empty page during its first pass, and Google only sees the content once the page has been rendered. On a site with thousands of pages, this leads to indexing delays.

Meta tags are valuable because they enable social media websites and Google to display valid thumbnails, titles, and descriptions for a particular page. However, these sites depend on the “head” tag of the fetched webpage to obtain this information, and they do not execute JavaScript for the landing page. React renders all content on the client, including the meta tags. Because the app shell stays the same across the whole app or website, it becomes difficult for individual pages to adapt their metadata.

A sitemap is a file that lists your site’s pages, videos, and other files, along with the relationships between them. Google reads this file to crawl your site more efficiently. React has no built-in way to create sitemaps. If you use React Router to manage routing, you will need to find tools to generate a sitemap, which may require some extra effort.
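As a rough illustration of what such a tool does, the sketch below turns a list of route paths into a sitemap.xml string. The route list, the `buildSitemap` helper, and the example.com domain are assumptions made for this example; they are not part of React or React Router.

```typescript
// Hypothetical route list; in a real app it might be derived from the router configuration.
const routes: string[] = ["/", "/products", "/products/shoes", "/about"];

// Escape the characters that cannot appear verbatim in XML text.
function escapeXml(value: string): string {
  return value
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&apos;");
}

// Build a sitemap document from a base URL and a list of paths.
function buildSitemap(baseUrl: string, paths: string[]): string {
  const origin = baseUrl.replace(/\/+$/, ""); // drop trailing slashes
  const entries = paths
    .map((path) => `  <url><loc>${escapeXml(origin + path)}</loc></url>`)
    .join("\n");
  return [
    '<?xml version="1.0" encoding="UTF-8"?>',
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">',
    entries,
    "</urlset>",
  ].join("\n");
}

const sitemap = buildSitemap("https://example.com/", routes);
console.log(sitemap);
```

The resulting string would typically be written to a sitemap.xml file at build time and served from the site root.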

Fetching, parsing, and executing JavaScript takes time. Furthermore, the JavaScript may need to make network calls to fetch content, and the user must wait before seeing the requested details. As part of its ranking criteria, Google has defined a set of web vitals that measure user experience. Long loading times lower the user experience score, which can lead Google to rank the site lower.

Below are a few considerations linked to setting up good SEO practices.


Let us understand how and why optimizing React for SEO is challenging.

ReactJs is a popular choice for web development in 2025, but let us look at the challenges React developers face when building an SEO-friendly website.

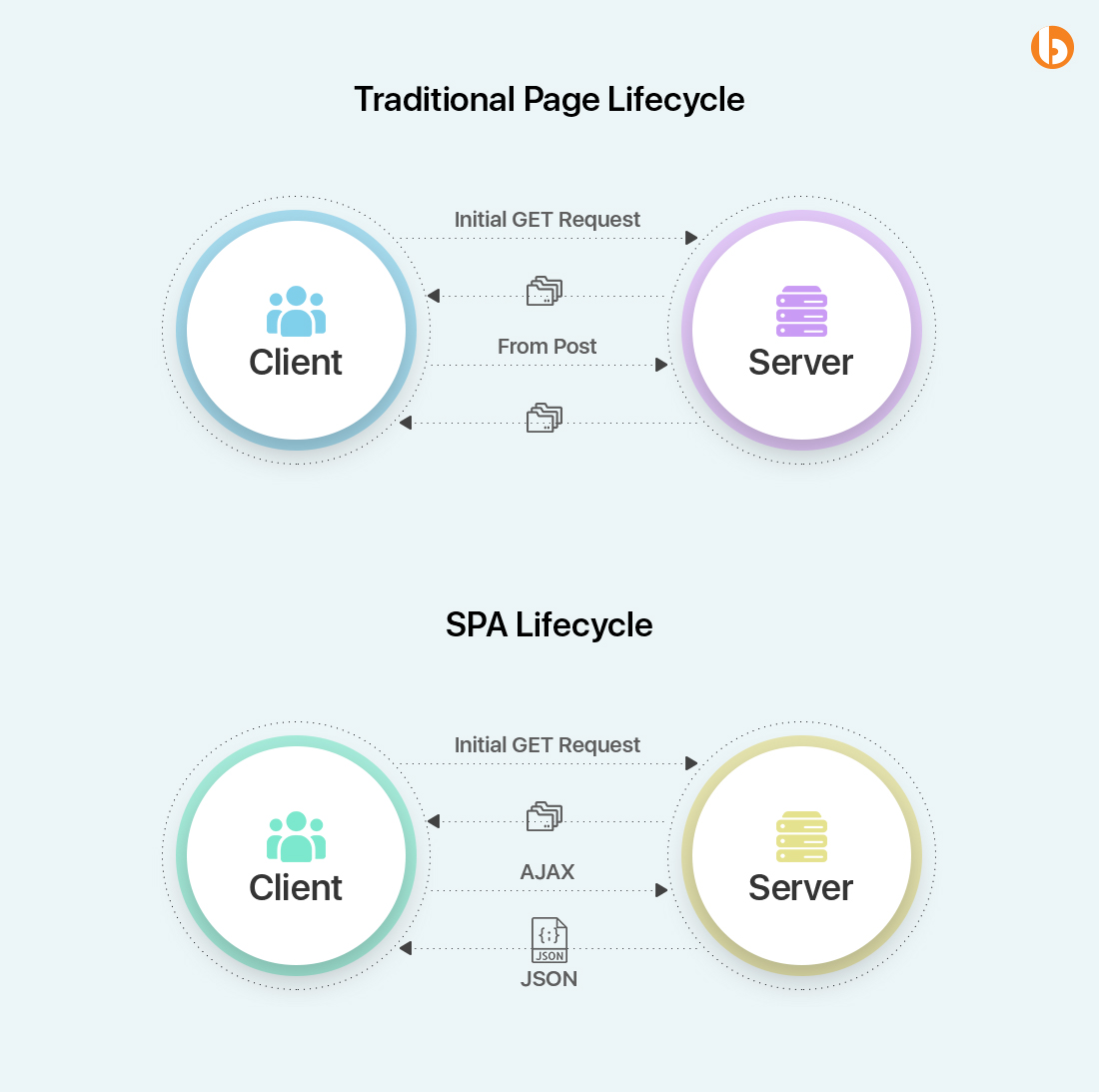

To reduce loading times, developers introduced the JavaScript-based Single Page Application (SPA). SPAs do not reload the whole page; instead, they refresh only the content that changes. This technology has played a vital role in improving website performance ever since its introduction, but it is also a major source of SEO problems for React apps and websites.

SPAs load information dynamically. As a result, when a crawler follows a link, it may struggle to complete the page load cycle, and the metadata may not refresh. This is a major reason the crawler can fail to see the SPA’s content, so the page ends up indexed as an empty page. None of this is good for ranking. This need not be a major concern, however, because developers can resolve these issues by generating separate, individual pages for Google’s bots. That raises another challenge: creating individual pages increases business expenses and can still make it difficult to rank on the first page of Google’s search results.

Although Single Page Applications have improved website performance, they raise several SEO issues.


As we have seen, several factors make React less SEO-friendly. So the question arises: how do you make a React website SEO-friendly? Here is how you can implement a React JS SEO approach and make your React web application SEO-friendly.

Isomorphic JavaScript technology can automatically detect whether JavaScript is enabled on the client side. When JavaScript is disabled, the isomorphic code runs on the server and delivers the final content to the client, so all the required content and attributes are available as soon as the page loads. When JavaScript is enabled, the page behaves like a dynamic app with several components. This enables faster loading than a conventional website and gives the user a smooth SPA experience.

Prerendering is one of the leading approaches to making single-page and multi-page web apps SEO-friendly. It is usually used when crawlers or search bots fail to render web pages effectively. Prerenderers are programs that intercept requests to the website. If the request comes from a crawler, the prerenderer sends a cached static HTML version of your site. If the request comes from a user, the page loads normally.
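The request routing described above can be sketched roughly as follows. The user-agent pattern, the `snapshotCache` object, and the `servePage` helper are all hypothetical names chosen for this illustration; real prerendering services maintain their own crawler lists and caches.

```typescript
// Illustrative, non-exhaustive list of crawler user-agent fragments (an assumption for this sketch).
const BOT_PATTERN = /googlebot|bingbot|duckduckbot|baiduspider|facebookexternalhit|twitterbot/i;

function isCrawler(userAgent: string): boolean {
  return BOT_PATTERN.test(userAgent);
}

// Hypothetical cache of prerendered static HTML snapshots, keyed by path.
const snapshotCache: { [path: string]: string } = {
  "/": "<html><body><h1>Home</h1><p>Welcome to the shop.</p></body></html>",
};

// The regular app shell that browsers receive and hydrate with JavaScript.
const APP_SHELL =
  '<html><body><div id="root"></div><script src="/bundle.js"></script></body></html>';

interface ServedPage {
  source: "snapshot" | "app-shell";
  html: string;
}

// Crawlers get the cached snapshot when one exists; everyone else gets the app shell.
function servePage(path: string, userAgent: string): ServedPage {
  const snapshot = snapshotCache[path];
  if (isCrawler(userAgent) && snapshot !== undefined) {
    return { source: "snapshot", html: snapshot };
  }
  return { source: "app-shell", html: APP_SHELL };
}

const googlebotUA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)";
console.log(servePage("/", googlebotUA).source);
```

Falling back to the app shell when no snapshot exists keeps the site working for crawlers even if the cache is incomplete.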

Advantages:

Drawbacks:

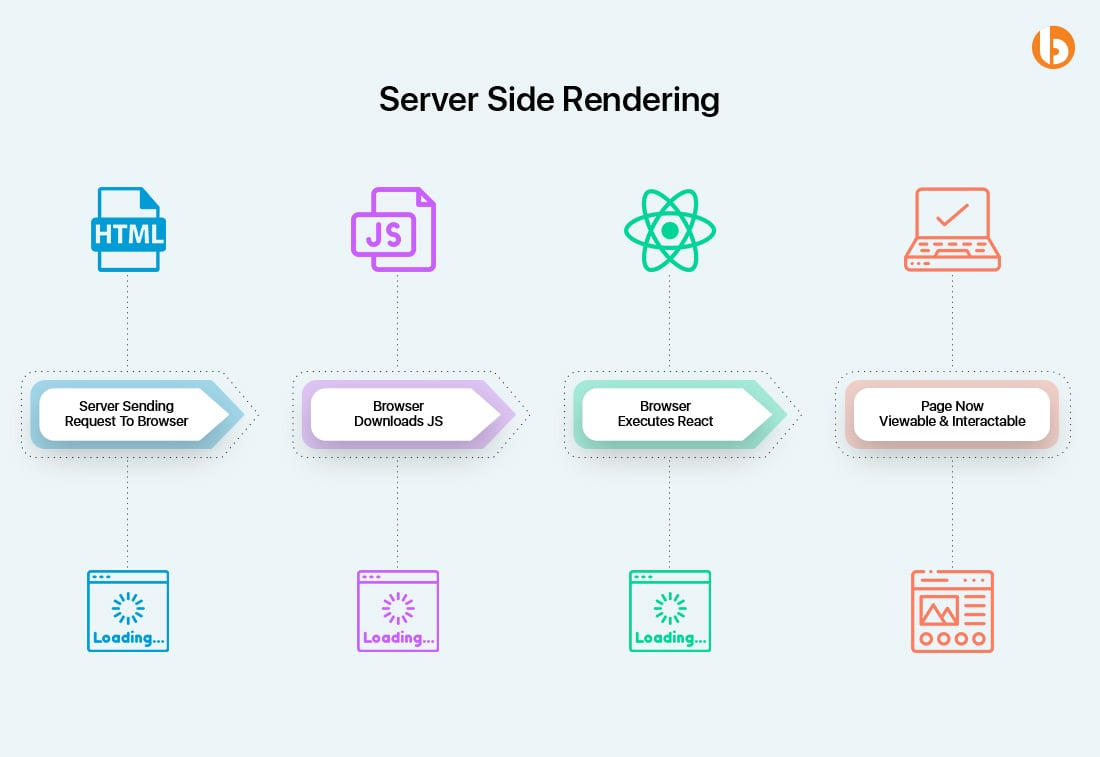

If you wish to build a React web application, you need a clear understanding of the difference between client-side rendering and server-side rendering. With client-side rendering, Googlebot and the browser receive HTML files that contain little or no content.

JavaScript code then downloads the content from the server and lets users view it on their screens. However, client-side rendering faces several SEO challenges, because Google’s crawlers may see little or no content, and what they do see may not be indexed appropriately. With server-side rendering, by contrast, browsers and Google’s bots receive HTML files that already contain the whole content. This helps Google’s bots index the pages, and helps the pages rank higher, without any hassle.
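To make the contrast concrete, here is a minimal sketch that builds both kinds of response as plain strings and then approximates what a crawler can read without executing JavaScript. It deliberately avoids React itself; the `Product` type, the helper names, and the sample data are assumptions for illustration only.

```typescript
interface Product {
  name: string;
  price: string;
}

function escapeHtml(value: string): string {
  return value.replace(/&/g, "&amp;").replace(/</g, "&lt;").replace(/>/g, "&gt;");
}

// Client-side rendering: the server sends only an empty shell plus a script tag.
function renderClientSideShell(): string {
  return '<!doctype html><html><head><title>Shop</title></head><body><div id="root"></div><script src="/bundle.js"></script></body></html>';
}

// Server-side rendering: the content is already present in the HTML.
function renderOnServer(products: Product[]): string {
  const items = products
    .map((p) => `<li>${escapeHtml(p.name)}: ${escapeHtml(p.price)}</li>`)
    .join("");
  return `<!doctype html><html><head><title>Shop</title></head><body><div id="root"><ul>${items}</ul></div><script src="/bundle.js"></script></body></html>`;
}

// Very rough approximation of the body text available without running JavaScript.
function textWithoutJavaScript(html: string): string {
  return html
    .replace(/<head>[\s\S]*?<\/head>/g, " ")
    .replace(/<script[\s\S]*?<\/script>/g, " ")
    .replace(/<[^>]+>/g, " ")
    .replace(/\s+/g, " ")
    .trim();
}

const products: Product[] = [
  { name: "Trail Shoes", price: "$89" },
  { name: "Rain Jacket", price: "$120" },
];

console.log(JSON.stringify(textWithoutJavaScript(renderClientSideShell())));
console.log(JSON.stringify(textWithoutJavaScript(renderOnServer(products))));
```

The client-side shell yields an empty string, while the server-rendered page exposes the product text directly in the HTML.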

These best practices will give you answers to how to make your React website SEO-friendly:

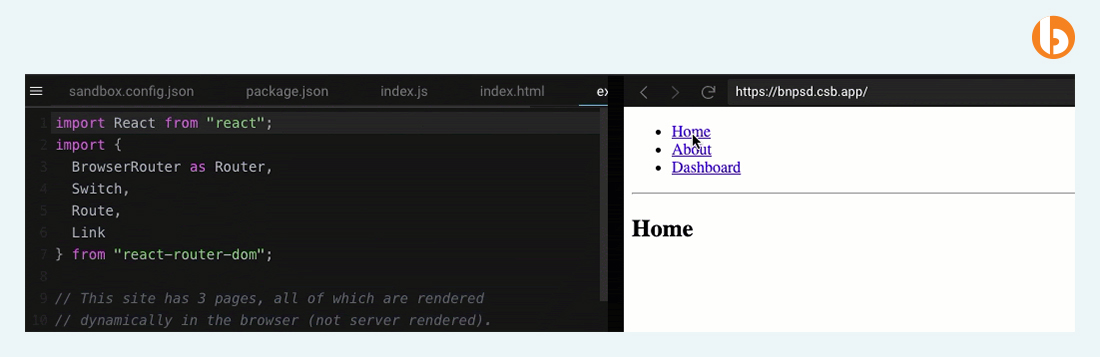

As you may know, React follows the SPA (Single-Page Application) model. You can use the SPA model more effectively if you define certain SEO elements and rules for your pages. Pages should open as individual URLs without the hash (#).

(According to Google, it cannot read URLs that rely on a hash, so it may not index URLs generated this way with React.)

Hence, we create URLs so that each one opens as a separate page. Use React Router hooks to handle the URLs. Below is a sample:

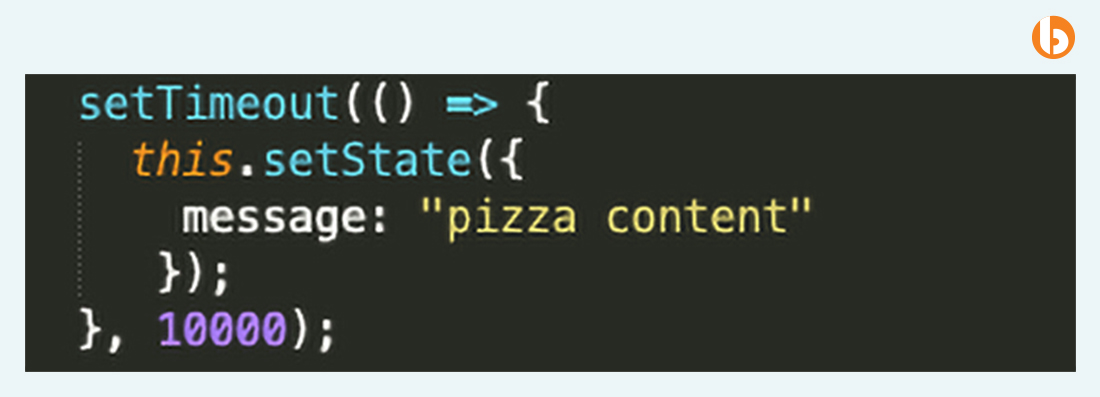
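To see why hash-based routes are a problem, the sketch below uses the standard `URL` class to show that everything after the # is a fragment rather than part of the path, so every hash route shares the same pathname. The `hashRouteToPath` helper and the example.com URLs are hypothetical and only illustrate the conversion to path-style URLs.

```typescript
// Split a URL into the part that identifies the page (pathname) and the fragment (hash).
function describeUrl(rawUrl: string): { pathname: string; hash: string } {
  const url = new URL(rawUrl);
  return { pathname: url.pathname, hash: url.hash };
}

// Convert a hash-style route such as /#/products into a path-style route such as /products.
// Simplification: any query string on the original URL is dropped.
function hashRouteToPath(rawUrl: string): string {
  const url = new URL(rawUrl);
  if (url.hash.indexOf("#/") !== 0) {
    return rawUrl; // not a hash route, leave untouched
  }
  return url.origin + url.hash.slice(1);
}

const hashStyle = "https://example.com/#/products/shoes";
console.log(describeUrl(hashStyle));
console.log(hashRouteToPath(hashStyle));
```

Both /#/products/shoes and /#/about report a pathname of "/", which is why path-style routes are the safer choice for pages that should be indexed separately.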

When building content, we recommend not deferring it with setTimeout. In such cases, Googlebot may leave the page and the website before it can read the content.

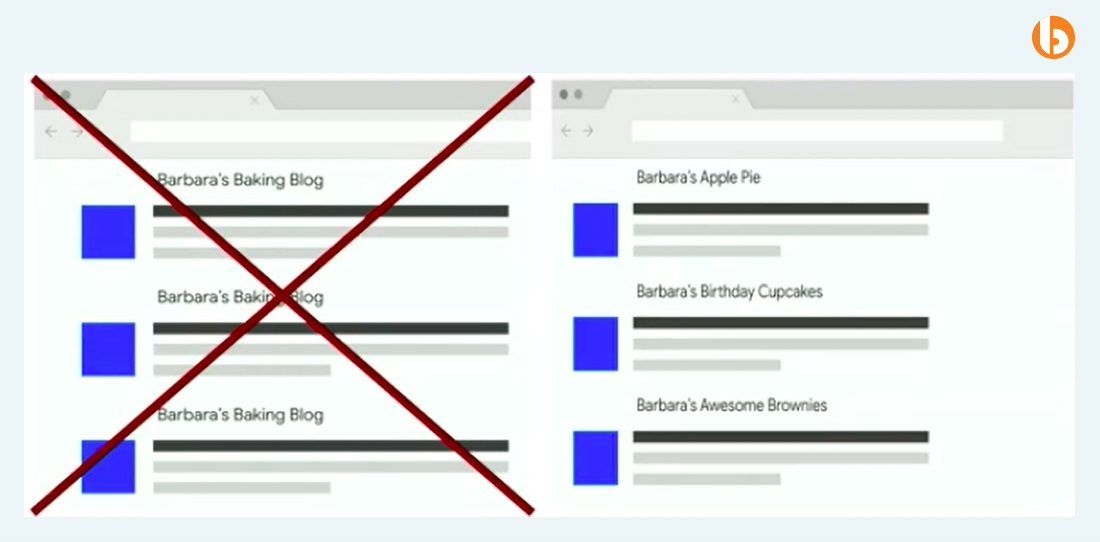

Google treats URLs that differ only in letter case as separate pages.

For example:

/vendi

/Vendi

These two URLs will be considered as two separate and individual pages by Google. To avoid such duplication of pages, compose every URL in lowercase.
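One common way to enforce this is to redirect any mixed-case path to its lowercase form. The sketch below shows the decision logic only; the `canonicalPath` and `decideRedirect` names are hypothetical, and wiring the result into a real server is left out.

```typescript
// Lowercase only the path portion; query strings can be case-sensitive, so they are left as-is.
function canonicalPath(requestUrl: string): string {
  const queryStart = requestUrl.indexOf("?");
  if (queryStart === -1) {
    return requestUrl.toLowerCase();
  }
  return requestUrl.slice(0, queryStart).toLowerCase() + requestUrl.slice(queryStart);
}

interface RedirectDecision {
  status: 200 | 301;
  location?: string;
}

// Serve lowercase URLs directly and permanently redirect everything else.
function decideRedirect(requestUrl: string): RedirectDecision {
  const canonical = canonicalPath(requestUrl);
  if (canonical === requestUrl) {
    return { status: 200 };
  }
  return { status: 301, location: canonical };
}

console.log(decideRedirect("/Vendi"));
console.log(decideRedirect("/vendi"));
```

With a permanent redirect in place, /Vendi and /vendi resolve to a single page instead of two duplicates.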

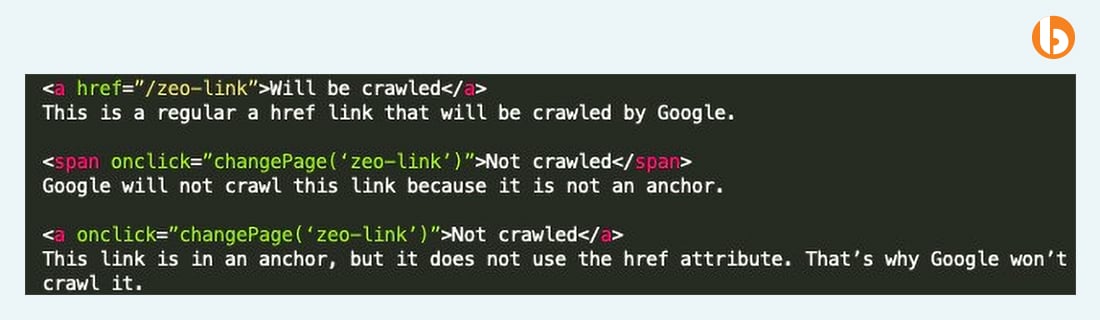

Make sure links are defined with “a href”. Googlebot cannot follow links that are implemented only with onclick. It is therefore vital to define links with an href so that Googlebot can discover and visit other relevant pages.
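The sketch below imitates, in a very simplified way, how a link-following crawler discovers pages: it only collects the href attribute of anchor tags. The regular expression and the `extractCrawlableLinks` helper are assumptions for illustration and are far cruder than a real HTML parser.

```typescript
// Collect href values from anchor tags; elements that navigate via onclick are never seen.
function extractCrawlableLinks(html: string): string[] {
  const links: string[] = [];
  const anchorPattern = /<a\s[^>]*href="([^"]*)"/gi;
  let match: RegExpExecArray | null;
  while ((match = anchorPattern.exec(html)) !== null) {
    links.push(match[1]);
  }
  return links;
}

const markup = [
  '<a href="/pricing">Pricing</a>',
  '<span onclick="goTo(\'/contact\')">Contact</span>',
  '<a onclick="goTo(\'/blog\')">Blog</a>',
].join("\n");

console.log(extractCrawlableLinks(markup));
```

Only /pricing is discovered; the two onclick-based links offer nothing for a crawler to follow.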

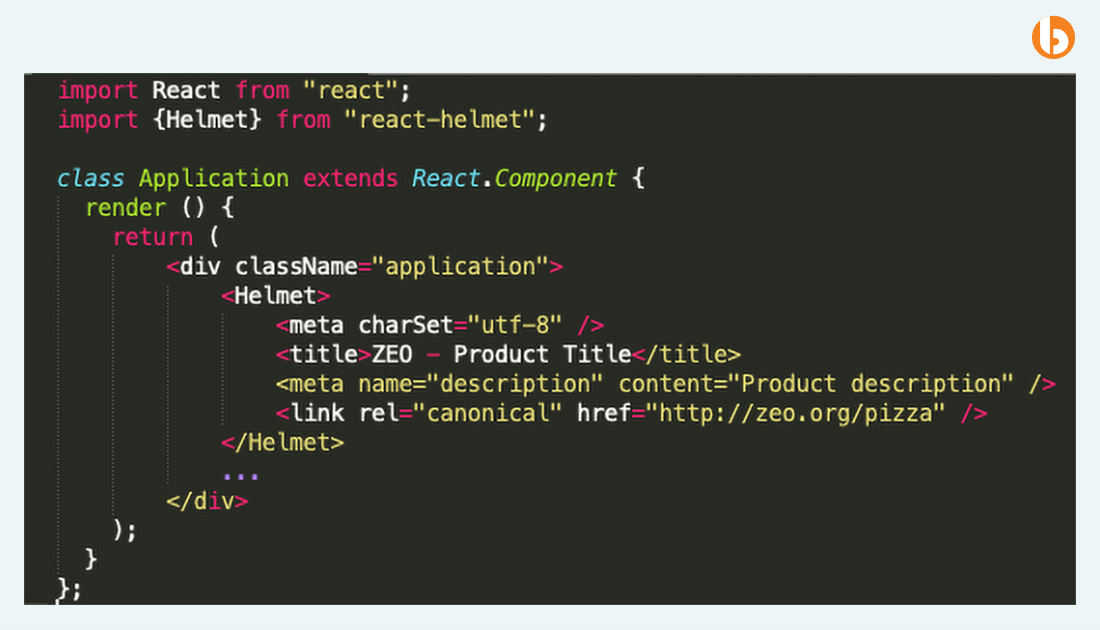

Metadata is an important component of SEO, so it should appear in the page source even when React is used. Using the same title and description structure on every page does little for CTR and other SEO metrics.

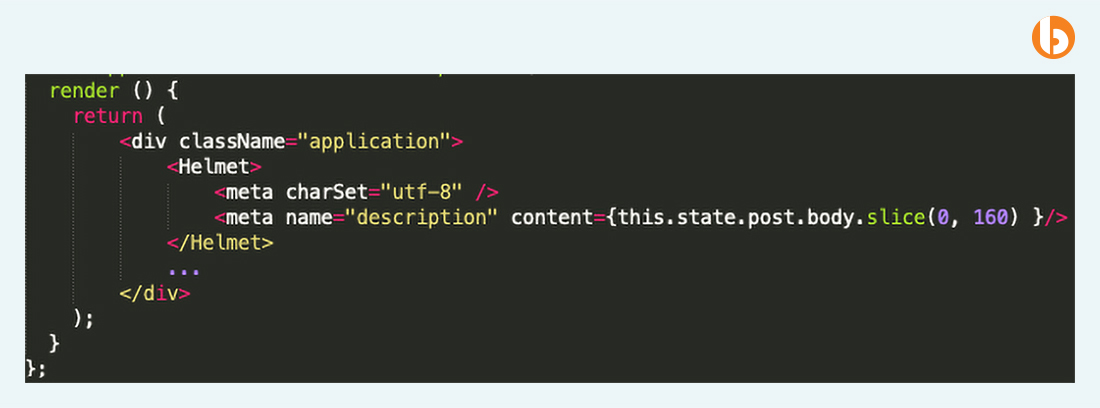

This is where React Helmet comes into play. Below is a sample code structure along with metadata:

If no description is available, try filling it in with the first 160 characters of the page content.
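The fallback described above could look roughly like this. The sketch builds the tags as plain strings rather than through React Helmet, and the `PageMeta` type and helper names are hypothetical; the same values could be passed to whatever head-management library the project uses.

```typescript
const MAX_DESCRIPTION_LENGTH = 160;

// Collapse whitespace and keep at most the first 160 characters of the page text.
function fallbackDescription(pageText: string): string {
  const normalized = pageText.replace(/\s+/g, " ").trim();
  return normalized.slice(0, MAX_DESCRIPTION_LENGTH);
}

function escapeAttribute(value: string): string {
  return value
    .replace(/&/g, "&amp;")
    .replace(/"/g, "&quot;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;");
}

interface PageMeta {
  title: string;
  description?: string;
}

// Use the explicit description when present, otherwise derive one from the content.
function buildHeadTags(meta: PageMeta, pageText: string): string {
  const explicit = meta.description !== undefined ? meta.description.trim() : "";
  const description = explicit !== "" ? explicit : fallbackDescription(pageText);
  return [
    `<title>${escapeAttribute(meta.title)}</title>`,
    `<meta name="description" content="${escapeAttribute(description)}" />`,
  ].join("\n");
}

console.log(buildHeadTags({ title: "Trail Shoes" }, "Lightweight   trail shoes\nfor wet and rocky terrain."));
```

Giving each page its own title and description this way avoids the identical metadata that an unchanged app shell would otherwise produce.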

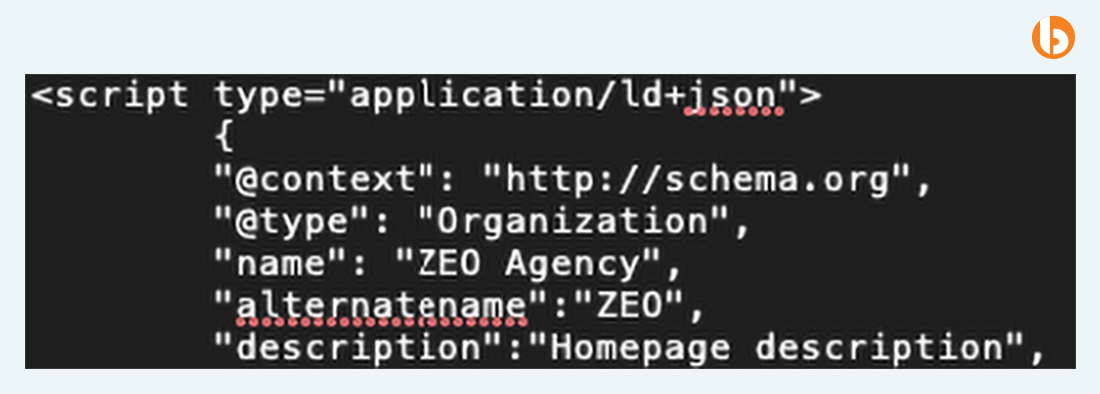

Make sure to keep structured data items (Organization schema, Product, etc.) in the source code along with the metadata.
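As a minimal sketch, structured data is usually embedded as a JSON-LD script tag. The example below builds one for an Organization; the `buildOrganizationJsonLd` helper and the sample values are assumptions, and only a small subset of the schema.org Organization properties is shown.

```typescript
interface OrganizationInput {
  name: string;
  url: string;
  logo?: string;
}

// Build a JSON-LD script tag describing an organization.
function buildOrganizationJsonLd(org: OrganizationInput): string {
  const schema = {
    "@context": "https://schema.org",
    "@type": "Organization",
    name: org.name,
    url: org.url,
    logo: org.logo, // omitted from the output when undefined
  };
  // Escape "<" so that a value containing "</script>" cannot close the tag early.
  const json = JSON.stringify(schema).replace(/</g, "\\u003c");
  return `<script type="application/ld+json">${json}</script>`;
}

console.log(buildOrganizationJsonLd({ name: "Example Inc.", url: "https://example.com" }));
```

Rendering this tag on the server keeps the structured data in the raw page source, so it does not depend on JavaScript execution to be seen.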

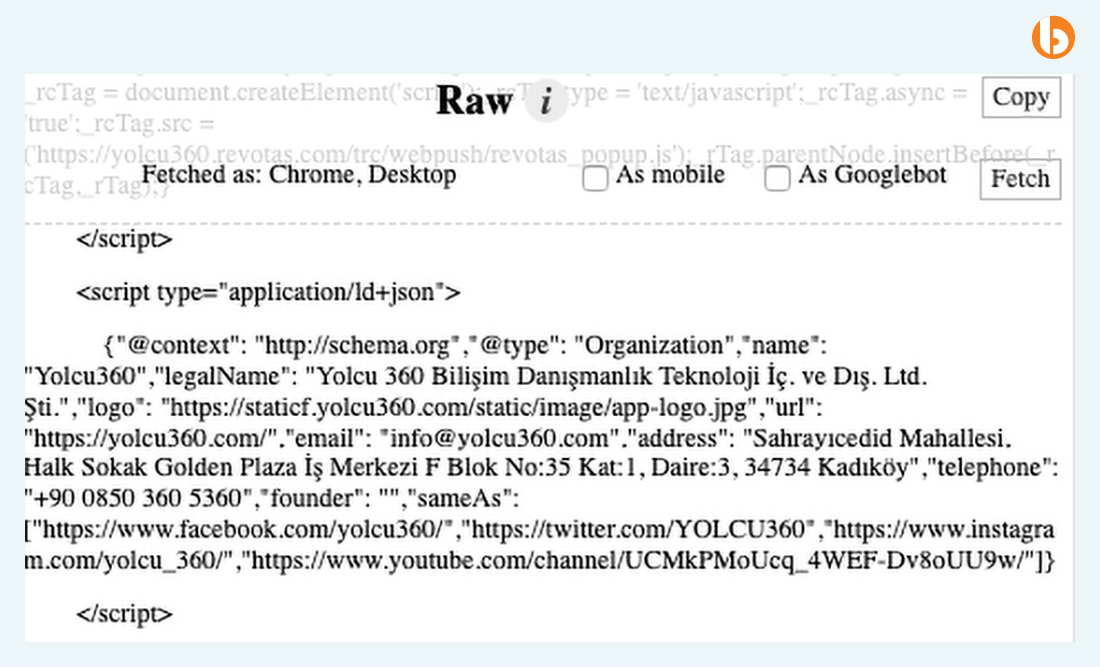

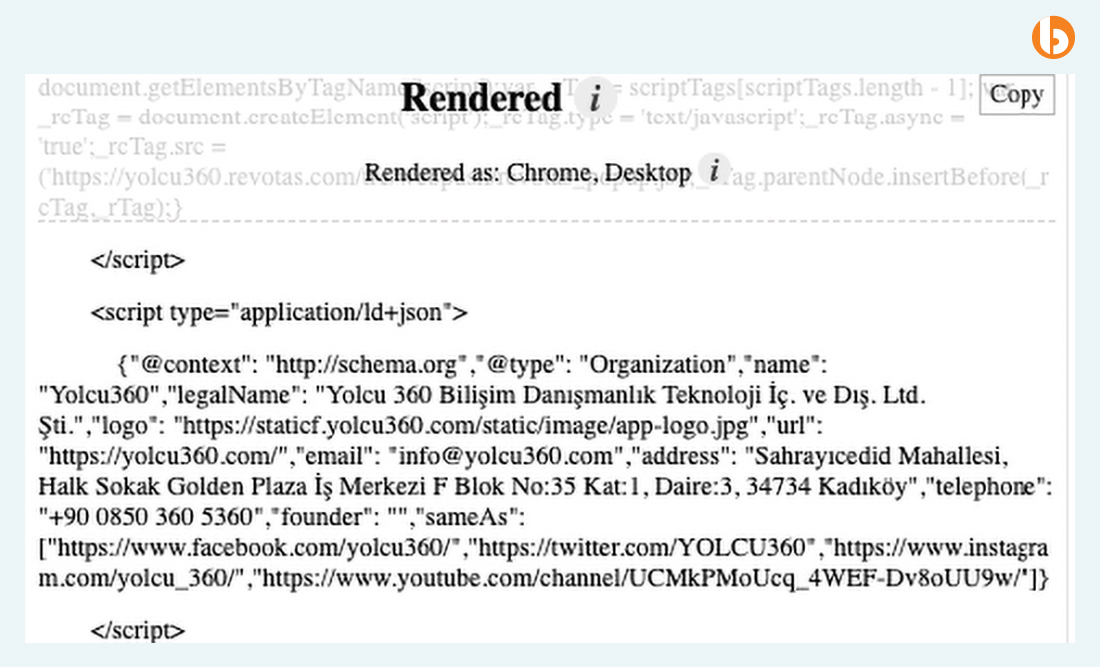

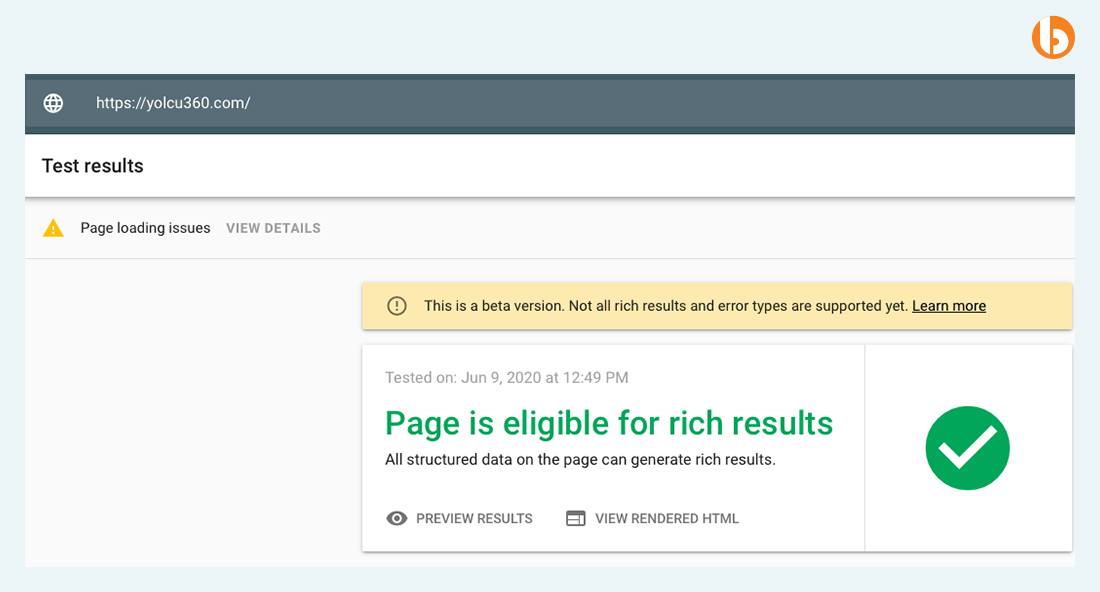

For example, below are the raw and rendered versions of yolcu360.com. The structured data is preserved in both versions. Ideally, there should be no difference between the two.

Raw:

Rendered:

When you test the page with the Rich Results Test tool, you can confirm that Googlebot can easily read the structured data on your page.

You can get metadata, content, and other elements displayed in Google by using React Helmet and server-side rendering together. Any missing or incorrect metadata can negatively impact each of the metrics in search results.

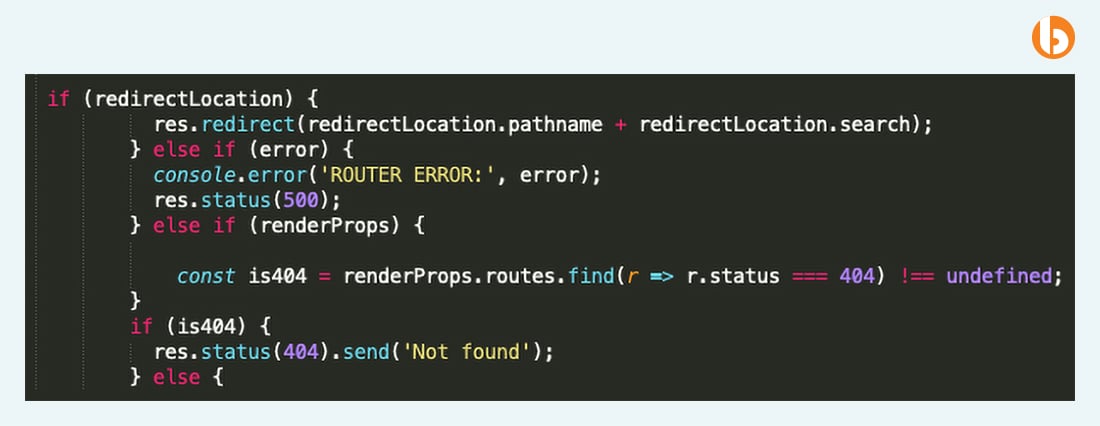

Every broken page should return a 404 status code. This is a gentle reminder to set up files such as server.js and route.js accordingly.
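The idea can be sketched as a small lookup that decides the status code before any HTML is sent. The `KNOWN_ROUTES` list and the `respond` helper are hypothetical; they are not taken from the server.js or route.js files mentioned above.

```typescript
// Hypothetical list of paths the application can actually render.
const KNOWN_ROUTES: string[] = ["/", "/products", "/about"];

interface PageResponse {
  status: 200 | 404;
  body: string;
}

// Return a real 404 for unknown paths instead of serving the app shell with a 200.
function respond(path: string): PageResponse {
  if (KNOWN_ROUTES.indexOf(path) !== -1) {
    return { status: 200, body: `<h1>Page for ${path}</h1>` };
  }
  return { status: 404, body: "<h1>Page not found</h1>" };
}

console.log(respond("/products").status);
console.log(respond("/does-not-exist").status);
```

Without a check like this, a single-page app tends to answer every URL with a 200 and the same shell, which search engines may treat as a soft 404.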

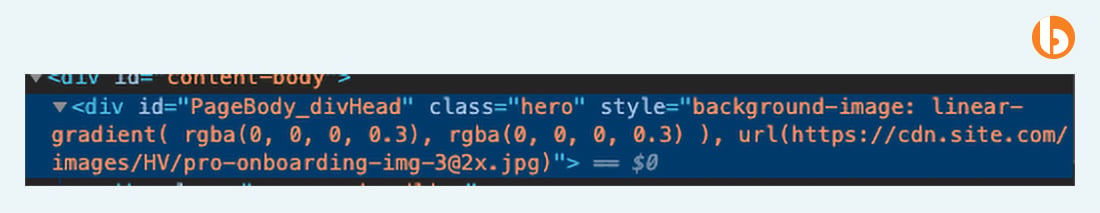

Specify your on-page images with “img src”. According to one study, images defined in other ways may show up in Google’s tools without any complications, yet Google still cannot index them.

Correct Use:

Using something like a CSS background image with React can make it difficult for Google to index images.

Incorrect Use:

Implementing lazy loading helps users explore the website faster and has a positive impact on the page speed score in Google.

You can find this package on npm.

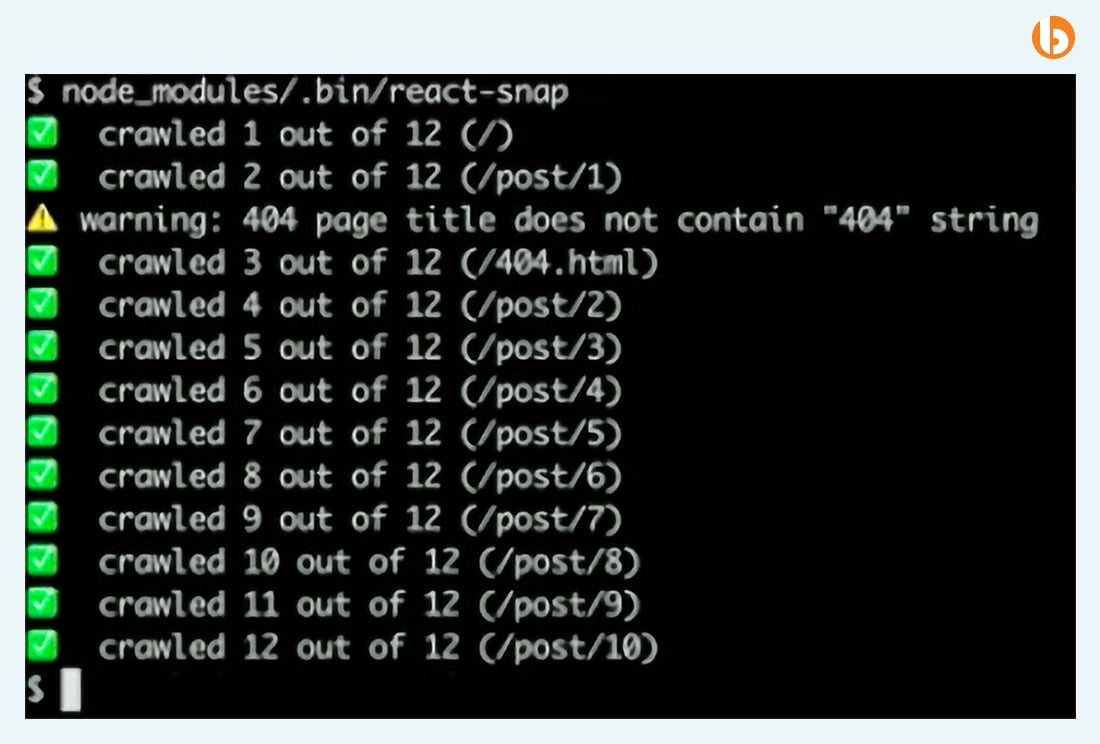

You can use React-Snap to improve website speed. Below is a sample:

Compared to several other JavaScript frameworks such as Vue or Angular, React can produce smaller files because it does not ship unneeded code. This helps increase page speed considerably. To be more precise, you can split a 2MB JS file into 60-70kb chunks and load them separately.

React and SEO are widely discussed together in 2025, and several important terms come along with them, including React Helmet, React Router, and React-snap. When using JavaScript, bear in mind that Google crawls and processes HTML websites faster and better than JavaScript ones. This does not mean Google can’t crawl JavaScript websites. You simply need to be careful and understand the potential challenges that may arise.

Bacancy Technology, a leading ReactJs development company, has proficient and dedicated React developers with the experience and knowledge to build an optimum SEO website for your business.

Pre-rendering and Server-side rendering are the two important ways to implement SEO optimization in your React apps.

React Helmet is a library used to manage the head tag, including your web page’s meta tags and language. It helps make your React website more SEO-friendly.

The Next.js JavaScript framework helps React projects solve the SEO problems that come with SPAs.

React applications that can run both on server-side and client-side are known as isomorphic apps.

Yes, with Next.js, you can develop React websites that are very easy for search engines to crawl.
