Have you ever used a voice assistant to search Google, or shopped online by typing a very long sentence into the search box? Search engines have become smart enough to pick the meaningful words out of a sentence and filter down to accurate results.

What is Natural Language Search?

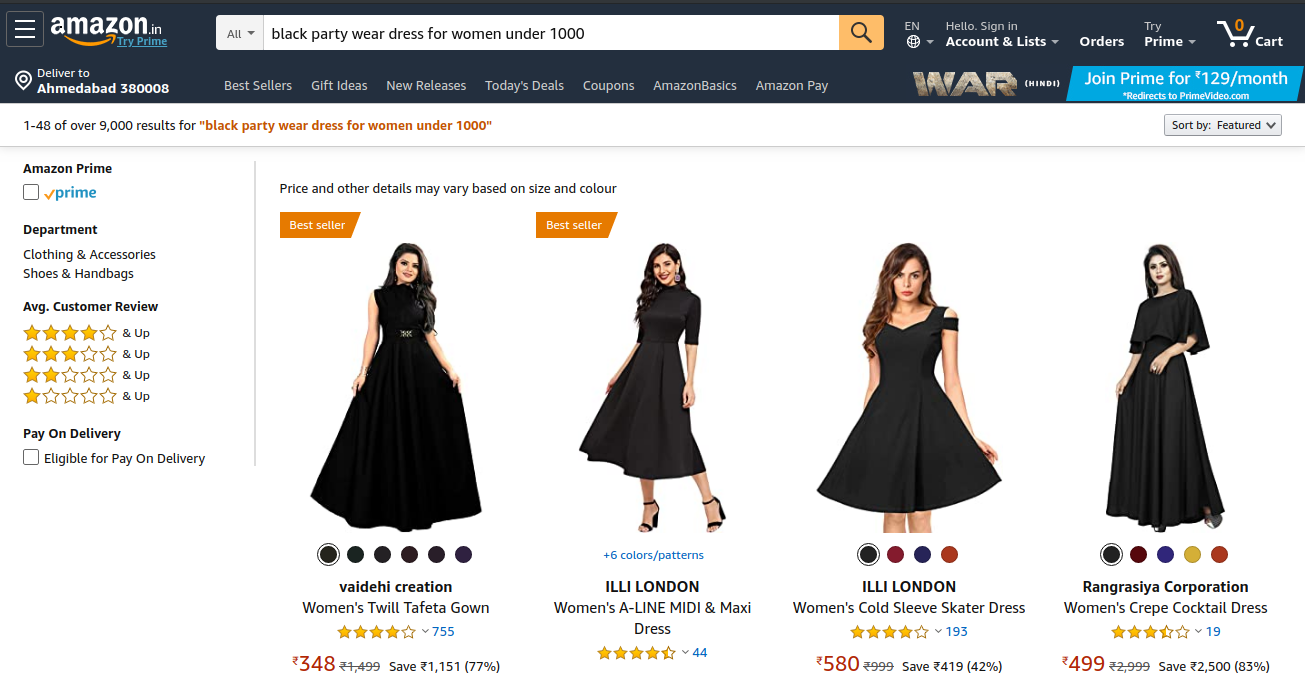

In simple words, Natural Language Search lets you ask a search engine a question, or type a long sentence, in your own language and in your very own words. It is built on natural language processing. In the image above, I searched for ‘black party wear a dress for women under 1000’. That one sentence carries four different filters: the first is the color black, the second is party dress, the third is for women, and the fourth is a price under 1000 rupees. Amazon handled it very well: the results satisfied all four filters, at least on the first page.
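To make the four filters concrete, here is a minimal sketch of how they could be pulled out of that sentence. It is only an illustration, not how Amazon does it: the vocabularies (`COLORS`, `OCCASIONS`, `AUDIENCES`) and the function `extract_filters` are hypothetical, and a real system would learn them from data instead of hard-coding them.

```python
import re

# Hypothetical vocabularies for illustration; a real system learns these from data.
COLORS = {"black", "red", "blue", "white"}
OCCASIONS = {"party", "casual", "formal"}
AUDIENCES = {"women", "men", "kids"}


def extract_filters(query: str) -> dict:
    """Pull structured filters out of a free-text shopping query."""
    # Split the query into lowercase words and numbers.
    tokens = re.findall(r"[a-z]+|\d+", query.lower())
    filters = {}
    for i, tok in enumerate(tokens):
        if tok in COLORS:
            filters["color"] = tok
        elif tok in OCCASIONS:
            filters["occasion"] = tok
        elif tok in AUDIENCES:
            filters["audience"] = tok
        # "under <number>" is read as an upper price limit.
        elif tok == "under" and i + 1 < len(tokens) and tokens[i + 1].isdigit():
            filters["max_price"] = int(tokens[i + 1])
    return filters


print(extract_filters("black party wear a dress for women under 1000"))
# {'color': 'black', 'occasion': 'party', 'audience': 'women', 'max_price': 1000}
```

Words such as ‘wear’, ‘a’ and ‘for’ match no vocabulary, so they are simply ignored.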

Traditional Search vs. Natural Language Search

In traditional search, the ‘and,’ ‘or,’ and ‘not’ conditions play a crucial role in building our database queries. With ‘and’, a result is shown only when both conditions match, whereas with ‘or’, a result is shown when either condition is true. Traditional search puts so many restrictions on users that they are bound to type particular words or characters to get their desired results. In Natural Language Search, users can type anything and the machine works out the meaning on its own, which reduces the trial and error of trying different search terms again and again.
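Here is a small sketch of what those ‘and’, ‘or’ and ‘not’ conditions look like in code. The product list and the helper names are made up for illustration; the point is that the user has to supply exactly the right tags.

```python
# Hypothetical catalogue: each product carries a set of exact-match tags.
products = [
    {"name": "black party dress", "tags": {"black", "party", "dress"}},
    {"name": "red party dress", "tags": {"red", "party", "dress"}},
    {"name": "black office shirt", "tags": {"black", "office", "shirt"}},
]


def search_and(items, a, b):
    """Both tags must match."""
    return [p["name"] for p in items if a in p["tags"] and b in p["tags"]]


def search_or(items, a, b):
    """Either tag may match."""
    return [p["name"] for p in items if a in p["tags"] or b in p["tags"]]


def search_not(items, a, b):
    """Tag a must match and tag b must not."""
    return [p["name"] for p in items if a in p["tags"] and b not in p["tags"]]


print(search_and(products, "black", "party"))  # ['black party dress']
print(search_or(products, "black", "party"))   # all three products
print(search_not(products, "black", "party"))  # ['black office shirt']
```

If the user types ‘evening’ instead of ‘party’, every one of these searches comes back empty, which is exactly the trial and error described above.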

Evolution of Natural Language Search

If you think that Natural Language Search is a recent invention, then you are mistaken. Have you ever heard about START? START is a Natural Language Question Answering System developed by MIT’s Artificial Intelligence Lab. It is the world’s first Web-based question answering system and has been online since 1993. After that, in 1996, Ask Jeeves came into the picture. Ask Jeeves encouraged users to phrase their queries as questions. While other search engines accepted only keywords, these two were ahead of their time in making the Natural Language Search concept a reality.

Future of Natural Language Search

In this Natural Language Search era, you must be wondering what comes next. The next advancement in search is conversational search. It means you can have a continuous conversation with your search engine as if you were talking with a friend. You can type the sentence, or you can speak it out loud with the help of Amazon’s Alexa, Apple’s Siri, Microsoft’s Cortana and Google Assistant. These AI assistants can make our search experience extremely amazing.

The process behind the scenes:

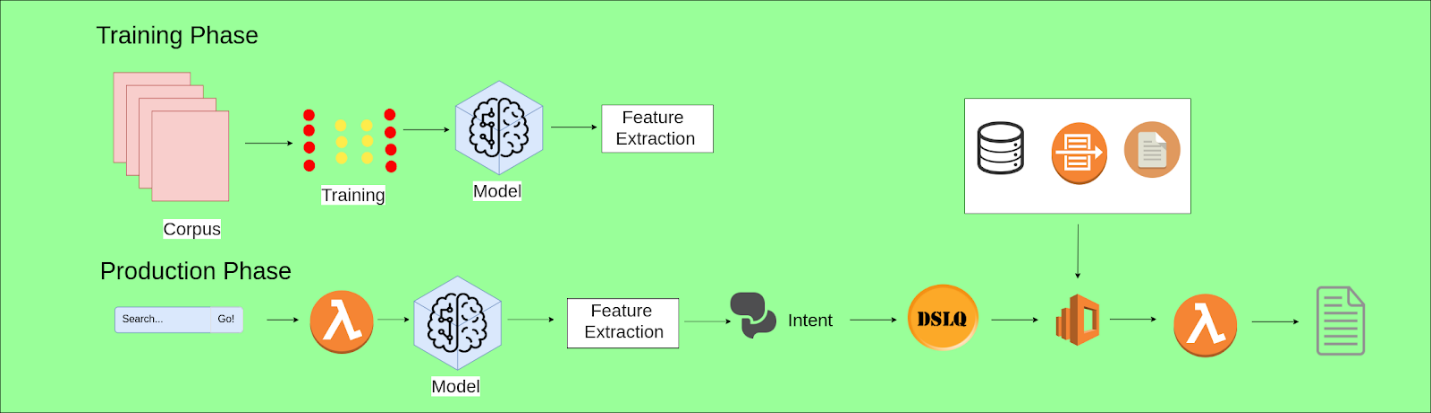

The process is divided into two parts:

1. Training phase

2. Production phase

Training phase:

This is where deep learning comes into play. First of all, we need to work on the corpus. A corpus is a big collection of documents, and I mean really big! Think of a stack of newspapers in digital form, although it can hold any kind of document.

What is the use of a corpus?

Here we use a corpus as the dataset from which we build a model that learns the relation between a sentence and its intent or topic. For example, if you pass this model a sentence like “I miss my documents”, the model will assume that you are talking about documents. But here is the twist: if you train the model on a corpus of banking data, it will interpret the same sentence in a banking context.
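The idea that the corpus decides the interpretation can be shown with a toy example. This sketch uses plain word counts as a stand-in for the deep learning model described here, and both corpora, the intent labels and the functions `train` and `predict` are invented for illustration.

```python
from collections import Counter, defaultdict


def train(corpus):
    """Count how often each word appears under each intent label."""
    word_counts = defaultdict(Counter)
    for sentence, intent in corpus:
        word_counts[intent].update(sentence.lower().split())
    return word_counts


def predict(model, sentence):
    """Pick the intent whose training words overlap most with the sentence."""
    words = sentence.lower().split()
    scores = {
        intent: sum(counts[w] for w in words) for intent, counts in model.items()
    }
    return max(scores, key=scores.get)


# Two tiny, made-up corpora: one general, one from a banking domain.
general_corpus = [
    ("i lost my keys", "lost_item"),
    ("i cannot find my documents", "lost_item"),
    ("what is the weather today", "weather"),
]
banking_corpus = [
    ("submit documents for my loan", "loan_documents"),
    ("which documents does the loan need", "loan_documents"),
    ("what is my account balance", "account_balance"),
    ("show my balance", "account_balance"),
]

query = "I miss my documents"
print(predict(train(general_corpus), query))  # lost_item
print(predict(train(banking_corpus), query))  # loan_documents
```

The same sentence lands on a different intent depending only on the corpus the model was trained on.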

This model is also used for feature extraction. For example, suppose you say “Give me information for Apple stock.”

The model knows that you are talking about Apple the company, not the fruit, and that you want the stock price for a specific time. Technically, this concept is known as NLU (Natural Language Understanding) with Named Entity Recognition. The whole process is known as training. The training phase requires enormous computing power in terms of GPU, RAM, and storage.
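As a rough illustration of the company-versus-fruit decision, the sketch below looks at the words around ‘Apple’. A trained entity recognition model learns such context from the corpus; the cue word sets and the function `classify_apple` here are hypothetical and hard-coded only to show the idea.

```python
import re

# Hypothetical context cues; a trained model would learn these from the corpus.
COMPANY_CUES = {"stock", "shares", "price", "earnings"}
FRUIT_CUES = {"eat", "juice", "pie", "fresh"}


def classify_apple(sentence: str):
    """Guess whether 'apple' in the sentence is a company or a fruit."""
    words = set(re.findall(r"[a-z]+", sentence.lower()))
    if "apple" not in words:
        return None
    company_score = len(words & COMPANY_CUES)
    fruit_score = len(words & FRUIT_CUES)
    if company_score > fruit_score:
        return "ORG"
    if fruit_score > company_score:
        return "FRUIT"
    return "UNKNOWN"


print(classify_apple("Give me information for Apple stock."))  # ORG
print(classify_apple("I want fresh apple juice"))              # FRUIT
```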

Production phase:

This phase faces heavy traffic because it is the public-facing module, so it obviously needs more power in terms of scalability, high availability, and security.

To fulfill these requirements, we use AWS cloud services with a serverless architecture, the AWS Elasticsearch service, and various data source connections. When the user fires a query, the serverless functions start an AI-based process: the results predicted by the model pass through the feature extraction process, and from these the intents are generated. Intent is a significant part of natural language search.

Intents are combinations of words that we can use to identify the search query. After that, we create the Domain Specific Language (DSL) query, which we can use directly in Elasticsearch. The newly generated Elasticsearch query is then fired and goes to the Lambda, which is directly in sync with the datasets. The datasets can be anything: a SQL database such as PostgreSQL or MySQL, file datasets, or log sets. After all these steps, the user gets the desired result.
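Here is a minimal sketch of the step that turns extracted filters into an Elasticsearch DSL query. The `bool`, `match`, `term` and `range` clauses are standard Elasticsearch Query DSL, but the function `build_es_query`, the shape of the `filters` dictionary and the index field names (`description`, `color`, `audience`, `price`) are assumptions made for this example, not our production schema.

```python
import json


def build_es_query(filters: dict) -> dict:
    """Translate extracted filters into an Elasticsearch bool query body."""
    must = []            # scored full-text clauses
    filter_clauses = []  # exact, unscored clauses

    if "occasion" in filters:
        must.append({"match": {"description": filters["occasion"]}})
    for field in ("color", "audience"):
        if field in filters:
            filter_clauses.append({"term": {field: filters[field]}})
    if "max_price" in filters:
        # "under 1000" is read as strictly less than 1000.
        filter_clauses.append({"range": {"price": {"lt": filters["max_price"]}}})

    return {"query": {"bool": {"must": must, "filter": filter_clauses}}}


# Hypothetical filters, as they might come out of the feature extraction step.
filters = {"color": "black", "occasion": "party", "audience": "women", "max_price": 1000}
print(json.dumps(build_es_query(filters), indent=2))
```

The resulting dictionary is the request body that would be sent to the Elasticsearch search endpoint; putting the exact-match conditions under `filter` keeps them out of relevance scoring.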

Conclusion:

Search is everywhere, but most implementations are single-word, rule-based, filter-oriented, and non-intuitive. There is a big gap between rule-based search and natural search. The market demands that even my grandmom should be able to search with ease. As a top-notch AI development company, we are able to achieve this kind of search by implementing advanced AI-driven intelligent enterprise search.