If you are a developer, this has probably happened to you: in the final phase of deploying an application, you notice that the production environment that will be handed to the customer does not match the testing environment.

This is normal and understandable: the two environments can differ in resources, language versions, libraries, or even operating systems.

Now imagine being able to replicate a test or production environment from local to remote, or vice versa, while completely abstracting away the underlying hardware. Do you think you're dreaming?

You can open your eyes: there is a convenient, open source solution, and it's called Docker.

And no, I'm not talking about virtualization.

Anyone who has never heard of Docker might think this is yet another virtualization system. That is wrong: from now on, we will be talking about containers.

What is a Container?

Roughly speaking, traditional virtualization is the ability of an operating system equipped with a hypervisor to emulate the underlying hardware of the machine on which it is installed, and to make that hardware available to one or more machines, which we call virtual. Each virtual machine has a complete set of applications and libraries as well as its own operating system. Virtual machines are completely isolated from one another and share only the virtual hardware made available by the hypervisor.

But why virtualize an entire operating system when you can limit yourself to a single component, application or service?

Well, this is the question that several companies, Google first among them, asked themselves for years.

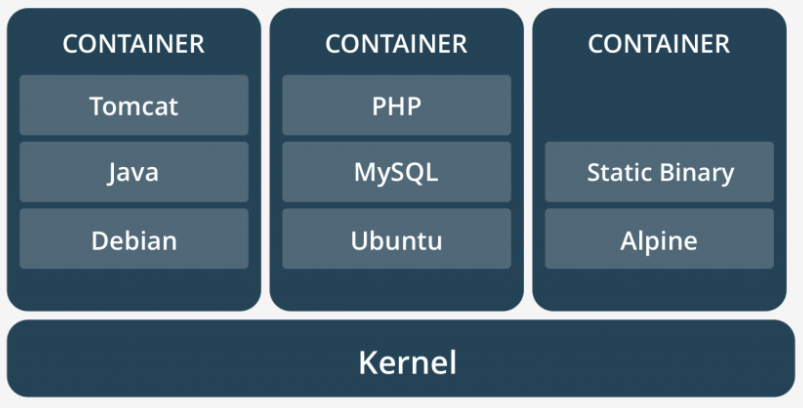

The revolutionary answer was: instead of using a virtualized hardware layer, you start from the (Linux) kernel of the host machine and attach only what you need to create a specific, isolated application environment, which is therefore called a container.

Docker, in turn, took this idea and turned it into an efficient system for distributing applications through a portable packaging format, the Docker image, which is built from a plain-text recipe called a Dockerfile.
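To give a feel for what such a recipe looks like, here is a minimal sketch in Python that simply renders the text of a small Dockerfile. The helper `render_dockerfile`, the base image `python:3.12-slim`, the `flask` package and the `app.py` entry point are assumptions chosen for illustration; only the instructions themselves (`FROM`, `WORKDIR`, `COPY`, `RUN`, `CMD`) are real Dockerfile syntax.

```python
import json
from typing import List


def render_dockerfile(base_image: str, packages: List[str], entrypoint: List[str]) -> str:
    """Return the text of a minimal Dockerfile (illustrative helper, not part of Docker)."""
    lines = [
        f"FROM {base_image}",   # start from an existing base image
        "WORKDIR /app",         # working directory inside the image
        "COPY . /app",          # copy the application code into the image
    ]
    if packages:
        # install only the libraries the application needs
        lines.append("RUN pip install --no-cache-dir " + " ".join(packages))
    # exec-form CMD is a JSON array, so json.dumps produces valid syntax
    lines.append("CMD " + json.dumps(entrypoint))
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # All values below are hypothetical examples.
    print(render_dockerfile("python:3.12-slim", ["flask"], ["python", "app.py"]))
```

Saving the printed text as a file named `Dockerfile` next to the application and running `docker build` in that directory would, under these assumptions, produce an image that contains the code and its libraries together.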

What is a Docker Container, and how does it differ from a Virtual Machine?

A Container is defined as a software package that already has everything it needs to run independently in an efficient and minimal way.

In practice, a container should run the same way on a Windows or a Linux machine, because it already contains all the code, libraries, system tools, and runtime it needs.

Another key feature of a Docker container is its isolation, which ensures that its code has no dependency or environment conflicts with other containers hosted on the same machine.

Let’s see some of the advantages of using Docker for Development

For developers, and for the system administrators who work most closely with them, Docker is a godsend: we can literally package an application.

We take our application, assign it only the resources it needs to run, package it, and happily deploy it on any machine, regardless of the operating system used.

1. Provisioning

As we have already seen, we eliminate the overhead of an emulated hardware layer, which can slow down resource allocation and the execution of our services.

For this reason, unlike with virtual machines, we can run many containers even locally without too much impact on performance.
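As a rough illustration of assigning only the resources a service needs, the sketch below builds (without executing) `docker run` commands that cap memory and CPU for each container. The helper names, the `worker-N` naming scheme, the default limits and the `nginx:alpine` image are assumptions for the example; `--detach`, `--name`, `--memory` and `--cpus` are existing `docker run` options.

```python
import shlex
from typing import List


def docker_run_command(image: str, name: str, memory: str = "256m", cpus: float = 0.5) -> List[str]:
    """Build (but do not execute) a `docker run` command with resource caps."""
    return [
        "docker", "run",
        "--detach",            # run the container in the background
        "--name", name,        # name used to refer to the container later
        "--memory", memory,    # memory limit, e.g. 256m
        "--cpus", str(cpus),   # number of CPUs the container may use (fractions allowed)
        image,
    ]


def many_containers(image: str, count: int) -> List[List[str]]:
    """One command per container; the worker-N names are a hypothetical scheme."""
    return [docker_run_command(image, f"worker-{i}") for i in range(1, count + 1)]


if __name__ == "__main__":
    for command in many_containers("nginx:alpine", 3):
        print(shlex.join(command))
```

Printing the commands instead of running them keeps the sketch usable on a machine without Docker; passing each list to `subprocess.run` would be one way to actually start the containers.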

2. Testing

As a direct consequence of the first point, using Docker for testing cuts the computational costs, which would otherwise grow in proportion to the number of tests performed if, for example, we were using a cloud server.

A container always uses the same assigned computing resources, so we can run as many tests as we want while keeping the cost constant.

3. Administration

Consider the development cycle of your application: a system like Docker lets you ship new releases quickly with a few simple commands.
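Those few commands could look roughly like the sequence built below. This is only a sketch: the repository name `example/shop`, the version `1.4.0` and the container name `shop` are hypothetical, and a real release process would usually add tests and error handling. The Docker subcommands used (`build`, `push`, `pull`, `rm`, `run`) are standard ones.

```python
import shlex
from typing import List


def release_commands(repository: str, version: str, container: str) -> List[List[str]]:
    """Return the commands for one release, in order, without executing them."""
    image = f"{repository}:{version}"
    return [
        # On the build machine: package the application and publish the image.
        ["docker", "build", "--tag", image, "."],
        ["docker", "push", image],
        # On the target machine: fetch the new image and replace the old container.
        ["docker", "pull", image],
        ["docker", "rm", "--force", container],  # removes the previous container, stopping it if running
        ["docker", "run", "--detach", "--name", container, image],
    ]


if __name__ == "__main__":
    # Hypothetical repository, version, and container name.
    for command in release_commands("example/shop", "1.4.0", "shop"):
        print(shlex.join(command))
```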

4. Distribution

Instead of distributing only the application, you can also decide to distribute a container that already includes the appropriate environment, the DBMS, and any optional libraries that are used exclusively by your application.

This lets us avoid updates that break other applications, malfunctions caused by mismatched libraries, and conflicts between coexisting versions of a language, while making the product easier to install for our end customer.

5. Modularity and Reusability

Instead of reinstalling in every container the services that are common to multiple application instances, we can put those services in a single container and connect it to each instance.
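One possible way to wire this up is a user-defined Docker network, on which containers can reach each other by name. The sketch below only builds the commands. The network name `appnet`, the database container `db`, the application image `example/shop:1.4.0` and the `DATABASE_HOST` variable are assumptions for illustration (the application would have to read that variable itself); `POSTGRES_PASSWORD` is the variable the official `postgres` image expects, shown here with a placeholder value.

```python
import shlex
from typing import List


def shared_service_commands(app_image: str, instances: int, network: str = "appnet",
                            db_name: str = "db", db_image: str = "postgres:16") -> List[List[str]]:
    """Commands for one shared database container plus N application containers."""
    commands = [
        # A user-defined network lets containers resolve each other by name.
        ["docker", "network", "create", network],
        # The shared service is started once.
        ["docker", "run", "--detach", "--name", db_name, "--network", network,
         "--env", "POSTGRES_PASSWORD=change-me",  # placeholder secret, replace in real use
         db_image],
    ]
    for i in range(1, instances + 1):
        # Each application instance points at the same service by its container name.
        commands.append(
            ["docker", "run", "--detach", "--name", f"app-{i}", "--network", network,
             "--env", f"DATABASE_HOST={db_name}",  # hypothetical variable read by the app
             app_image]
        )
    return commands


if __name__ == "__main__":
    for command in shared_service_commands("example/shop:1.4.0", 2):
        print(shlex.join(command))
```

The design choice here is that the database is installed and started once, and every application container only needs to know its name.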

Conclusion

Thanks to its many benefits, Docker's container model is spreading so disruptively that, within a couple of years, the biggest companies in the world (including IBM, Google, and Facebook) have started adopting it.

In addition, according to a 2015 survey on container adoption by companies like Bacancy Technology, 67% of companies had already completed a roll-out in those two years, and as many as 95% said they wanted to develop containers on the Linux operating system, for example to use Docker for Ruby development.

Docker's enterprise offering, “Docker Datacenter”, helps large companies work more quickly through their own Docker-ready platforms. Docker describes this tool as an “integrated end-to-end platform for the development and management of applications in agile mode and on any scale”. It is, therefore, an integration tool that allows developers and operations teams to collaborate on building and running software throughout its lifecycle.